NVIDIA H100 NVL: A Game Changer in Accelerated Computing

The introduction of Graphics Processing Units (GPUs) revolutionised the computer industry, with NVIDIA leading the drive. The company’s latest offering, the NVIDIA H100 NVL, is a testament to its commitment to pushing the boundaries of what’s possible in accelerated computing.

Table of contents

- Unprecedented Performance and Scalability

- NVIDIA H100 NVL Supercharged Large Language Model Inference

- Enterprise AI Adoption Of NVIDIA H100 NVL

- Securely Accelerating Workloads

- Real-Time Deep Learning Inference of NVIDIA H100 NVL

- Exascale High-Performance Computing

- NVIDIA H100 NVL Accelerated Data Analytics

- Enterprise-Ready Utilization

- Built-In Confidential Computing with NVIDIA H100 NVL

- Unparalleled Performance for Large-Scale AI and HPC

- Product Specifications

- Final Thought

- Youtube Video About NVIDIA H100 NVL

- FAQ

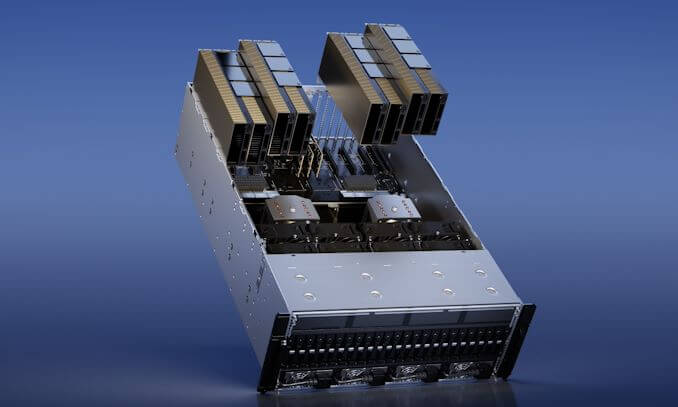

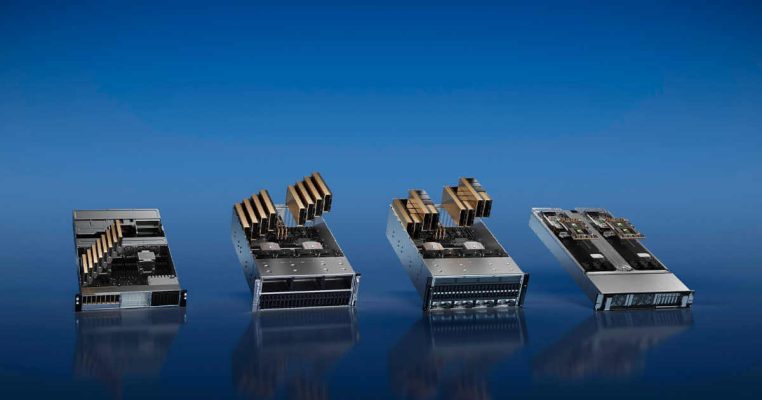

Unprecedented Performance and Scalability

The NVIDIA H100 NVL is a marvel of modern technology, offering unprecedented performance, scalability, and security.

The NVIDIA NVLink Switch System allows it to manage any workload with ease. This system enables for the interconnection of up to 256 H100 GPUs, resulting in a significant increase in accelerated computing capabilities.

Furthermore, the NVIDIA NVL has a specialised Transformer Engine. Also this engine is intended to handle trillion-parameter language models, a task that most GPUs would find difficult.

The NVIDIA H100 NVL’s combined technological breakthroughs may accelerate large language models (LLMs) by an amazing 30X over the previous generation, also making it a leader in conversational AI.

NVIDIA H100 NVL Supercharged Large Language Model Inference

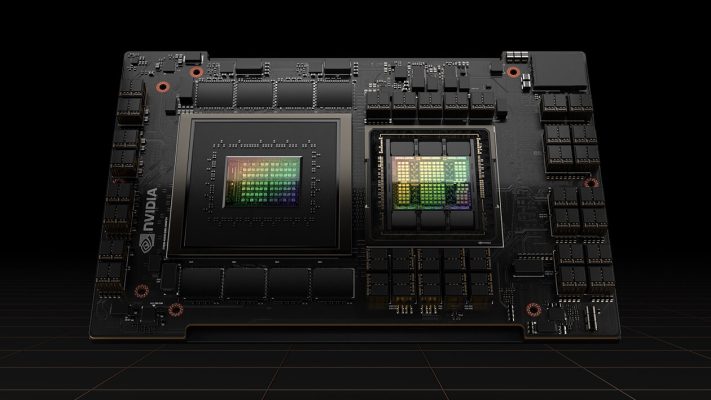

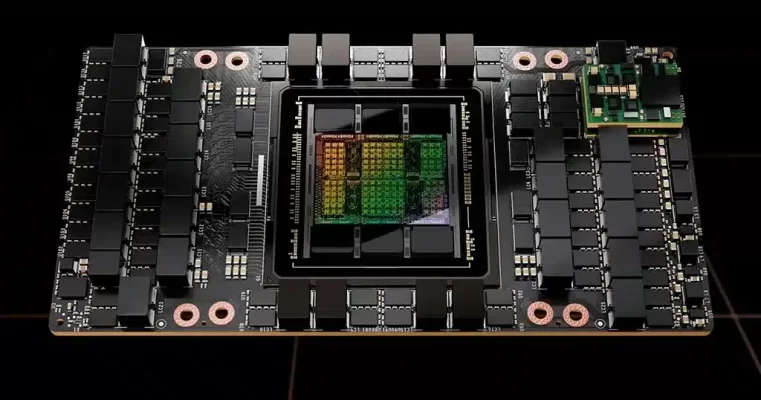

The NVIDIA H100 NVL is not just about raw power; it’s also about finesse. The NVIDIA H100 with NVLink bridge provides optimal performance for LLMs with up to 175 billion parameters by using its Transformer Engine, NVLink, and 188GB HBM3 memory.

Also this combination enables simple scaling across any data centre, ushering LLMs into the mainstream.

NVIDIA H100 NVL GPU-equipped servers can boost GPT-175B model performance by up to 12X over NVIDIA DGX A100 systems.

This boost in performance is accomplished while retaining low latency in power-constrained data centre scenarios, demonstrating the H100 NVL’s efficiency.

Enterprise AI Adoption Of NVIDIA H100 NVL

The adoption of AI in enterprises is now mainstream, and organizations need end-to-end, AI-ready infrastructure. The NVIDIA H100 NVL GPUs for mainstream servers come with a five-year subscription, including enterprise support, to the NVIDIA AI Enterprise software suite.

This package streamlines AI adoption by ensuring organisations have access to the AI frameworks and technologies required to construct H100-accelerated AI processes such as AI chatbots, recommendation engines, vision AI, and more.

Securely Accelerating Workloads

The NVIDIA H100 NVL is not just about performance and scalability; it’s also about security. The GPU is intended to safely accelerate workloads ranging from corporate to exascale.

It has fourth-generation Tensor Cores and a Transformer Engine with FP8 accuracy, allowing for up to 4X quicker training for GPT-3 (175B) models than the previous iteration.

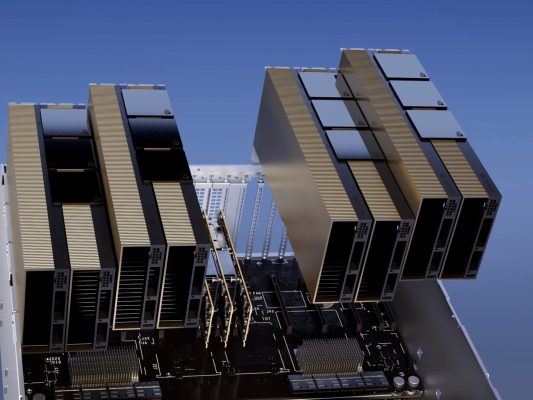

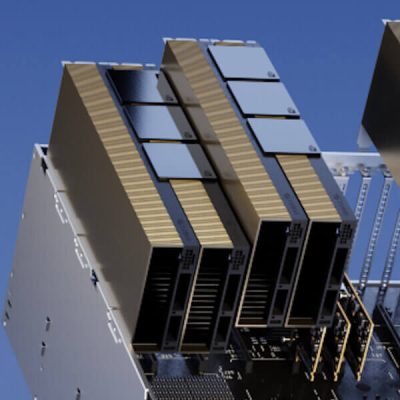

The combination of fourth-generation NVLink, which provides 900 GB/s of GPU-to-GPU interconnect; NDR Quantum-2 InfiniBand networking, which accelerates communication by every GPU across nodes; PCIe Gen5; and NVIDIA Magnum IO software enables efficient scaling from small enterprise systems to massive, unified GPU clusters.

Real-Time Deep Learning Inference of NVIDIA H100 NVL

The NVIDIA H100 NVL extends NVIDIA’s market-leading inference leadership with several advancements that accelerate inference by up to 30X and deliver the lowest latency.

Fourth-generation Tensor Cores accelerate all precisions, including FP64, TF32, FP32, FP16, INT8, and now FP8, reducing memory use and increasing performance while retaining LLM accuracy.

Exascale High-Performance Computing

The NVIDIA H100 NVL triples the floating-point operations per second (FLOPS) of double-precision Tensor Cores, delivering 60 teraflops of FP64 computing for HPC. AI-infused HPC applications may also take use of the H100’s TF32 precision to reach one petaflop of performance for single-precision matrix-multiply operations while requiring no code modifications.

NVIDIA H100 NVL Accelerated Data Analytics

The NVIDIA H100 NVL delivers the compute power—along with 3 terabytes per second (TB/s) of memory bandwidth per GPU and scalability with NVLink and NVSwitch—to tackle data analytics with high performance and scale to support massive datasets.

The NVIDIA data centre architecture, when combined with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS, is uniquely equipped to accelerate these massive workloads with unsurpassed levels of performance and efficiency.

Enterprise-Ready Utilization

The NVIDIA H100 NVL incorporates second-generation Multi-Instance GPU (MIG) technology, which maximises GPU utilisation by securely dividing it into up to seven distinct instances.

Also with confidential computing support, the NVIDIA H100 NVL allows secure, end-to-end, multi-tenant usage, making it ideal for cloud service provider (CSP) environments.

Built-In Confidential Computing with NVIDIA H100 NVL

NVIDIA Confidential Computing is a built-in security feature of the Hopper architecture that makes the NVIDIA H100 NVL the world’s first accelerator with confidential computing capabilities.

Users may maintain the security and integrity of their data and applications while benefiting from the unrivalled acceleration of H100 GPUs.

Unparalleled Performance for Large-Scale AI and HPC

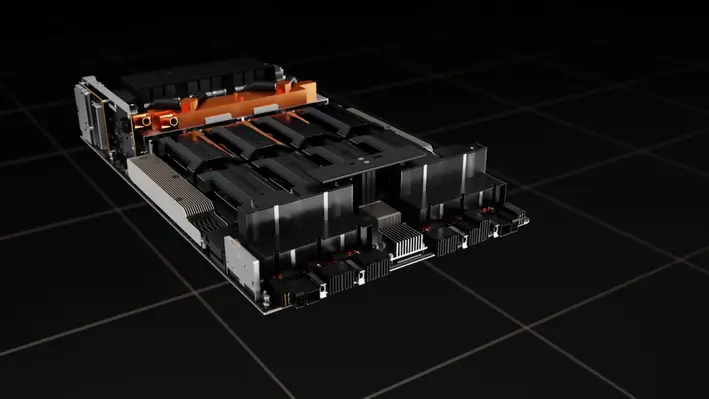

The NVIDIA Grace Hopper CPU+GPU architecture, purpose-built for terabyte-scale accelerated computing and enabling 10X greater performance on large-model AI and HPC, also will be powered by the Hopper Tensor Core GPU.

The NVIDIA Grace CPU takes use of the Arm architecture’s flexibility to produce a CPU and server architecture designed from the bottom up for accelerated computation.

The Hopper GPU is connected to the Grace CPU through NVIDIA’s ultra-fast chip-to-chip interface, which provides 900GB/s of bandwidth, which is 7X faster than PCIe Gen5.

Product Specifications

Here is a comparison table of the H100 NVL in different form factors:

| Form Factor | FP64 | FP64 Tensor Core | FP32 | TF32 Tensor Core | BFLOAT16 Tensor Core | GPU Memory | GPU Memory Bandwidth |

| H100 SXM | 34 teraFLOPS | 67 teraFLOPS | 67 teraFLOPS | 989 teraFLOPS | 1,979 teraFLOPS | 80GB | 3.35TB/s |

| H100 PCIe | 26 teraFLOPS | 51 teraFLOPS | 51 teraFLOPS | 756 teraFLOPS | 1,513 teraFLOPS | 80GB | 2TB/s |

| H100 NVL | 68 teraFLOPS | 134 teraFLOPS | 134 teraFLOPS | 1,979 teraFLOPS | 3,958 teraFLOPS | 188GB | 7.8TB/s |

Final Thought

The NVIDIA H100 NVL is a game-changer in the world of accelerated computing. Its performance, scalability, and security will transform AI, HPC, and also data analytics. H100 NVL-powered computing is the future.

The H100 NVL shows NVIDIA’s dedication to accelerated computing by supercharging big language model inference and also providing secret computing. The H100 NVL will shape computing’s future.

Youtube Video About NVIDIA H100 NVL

You May Also Like

The Benefits of Cloud Computing For Small Businesses

The 10 Best Computer Games of All Time

FAQ

Tensor Core GPU H100 NVL delivers unmatched performance, scalability, and security. The NVIDIA NVLink Switch System connects up to 256 H100 GPUs, also the Transformer Engine handles trillion-parameter language models, and secret computing is built in. It secures enterprise-to-exascale workload acceleration.

The H100 NVL with NVLink bridge optimises LLMs up to 175 billion parameters using its Transformer Engine, NVLink, and 188GB HBM3 memory. Also this combination makes LLMs mainstream by enabling data center-wide scalability.

High-performance NVIDIA AI Enterprise software simplifies AI adoption. The H100 NVL GPUs for mainstream servers come with a five-year subscription and also enterprise support. This package provides H100-accelerated AI process frameworks and tools to organisations.

The H100 NVL’s second-generation Multi-Instance GPU (MIG) technology securely partitions each GPU into seven instances to maximise GPU utilisation. Also this secures end-to-end, multi-tenant usage, making it perfect for cloud service provider (CSP) situations.

NVIDIA Grace Hopper CPU+GPU architecture accelerates large-model AI and HPC 10X. The Arm architecture allows the NVIDIA Grace CPU to construct a CPU and server architecture optimised for accelerated computation. NVIDIA’s ultra-fast chip-to-chip connection connects the Grace CPU and Hopper GPU, giving 900GB/s of bandwidth, 7X faster than PCIe Gen5.